A team of researchers has uncovered a significant vulnerability in Apple’s much-hyped Vision Pro headset, one with alarming implications for user privacy and security. Dubbed GAZEploit, the attack targets the device’s gaze-tracking technology and could allow an attacker to infer sensitive text such as passwords, PIN codes, and messages. The revelation raises serious questions about the security of emerging wearable tech, particularly devices that rely on novel and complex input methods.

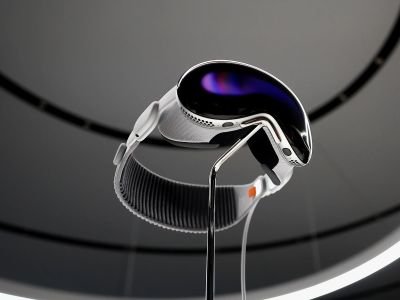

Apple’s Vision Pro, which blends augmented and virtual reality, has been positioned as a game-changer in both the tech and entertainment industries. But the discovery of GAZEploit shows that the next generation of wearables may also bring a new class of security concerns.

How GAZEploit Works: Turning a Feature into a Security Flaw

The core of the GAZEploit vulnerability lies in the Vision Pro’s gaze-tracking technology. This feature lets users navigate the interface and enter text by looking at keys on a virtual keyboard. However, the researchers found that the same feature could be exploited to infer what a user is typing, without any direct access to the device.

Using AI-based analysis and geometric calculations, the research team tracked the eye movements of the virtual avatar (which Apple calls a Persona) that the headset generates to represent the user during activities like video calls. This allowed them to estimate where the user’s gaze landed on the virtual keyboard and, from that, to reconstruct the text being typed. Given five guesses, they recovered passwords 77% of the time and deciphered messages with up to 92% accuracy.
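To make the geometric step more concrete, here is a minimal Python sketch of one way gaze points could be mapped to keys: assume the keyboard’s position and key size are already known, compute the centre of every key, and assign each gaze fixation to the nearest centre. The layout, the row offsets, and the helper names (`key_centers`, `nearest_key`, `decode`) are hypothetical choices made for illustration; this is not the researchers’ implementation, which also relied on a trained neural network.

```python
import math

# Hypothetical, simplified QWERTY layout (letters only).
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
# Illustrative horizontal offset of each row, measured in key widths.
ROW_OFFSETS = [0.0, 0.25, 0.75]


def key_centers(origin=(0.0, 0.0), key_size=1.0):
    """Return {letter: (x, y)} key centres for a keyboard whose top-left
    corner is at `origin` (y grows downward) and whose keys are square
    with side `key_size`, i.e. the keyboard's placement and size."""
    ox, oy = origin
    centers = {}
    for r, (row, offset) in enumerate(zip(ROWS, ROW_OFFSETS)):
        for c, letter in enumerate(row):
            x = ox + (offset + c + 0.5) * key_size
            y = oy + (r + 0.5) * key_size
            centers[letter] = (x, y)
    return centers


def nearest_key(point, centers):
    """Pick the key whose centre is closest (Euclidean) to a gaze point."""
    return min(centers, key=lambda letter: math.dist(point, centers[letter]))


def decode(fixations, origin=(0.0, 0.0), key_size=1.0):
    """Turn a sequence of gaze fixations into a guessed string."""
    centers = key_centers(origin, key_size)
    return "".join(nearest_key(p, centers) for p in fixations)
```

For example, `decode([(5.8, 1.4), (7.4, 0.6)])` returns `"hi"` with the default layout. Nearest-centre assignment is the simplest possible decoder: a noisy gaze estimate can land closer to a neighbouring key, which is one reason an attacker benefits from being allowed several guesses.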

What makes this attack particularly insidious is that it doesn’t require physical access to the headset. Anyone who can see the avatar, for example another participant in a video call, could potentially extract typed data, which makes it a serious concern wherever the headset is used for remote communication.

The Power of Neural Networks in Hacking

The researchers achieved this accuracy with a specially trained neural network that maps eye movements to virtual keyboard inputs. Combined with precise geometric calculations of the keyboard’s placement and size, the network let the team determine which keys the user was focusing on. Even duplicate letters and occasional typos did not significantly derail the process; the model adapted to these variations and continued deciphering the input.
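Before gaze can be mapped to keys, a continuous stream of gaze samples has to be split into fixations, the moments when the eye rests on one spot. The article does not say how the researchers did this, so the following Python sketch uses a standard, generic eye-tracking technique, dispersion-threshold identification (I-DT), purely as an illustration. The function names and the thresholds `max_dispersion` and `min_samples` are assumptions, not values from the GAZEploit work.

```python
def _dispersion(points):
    """Dispersion of a window of points: (max x - min x) + (max y - min y)."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples, max_dispersion=0.5, min_samples=5):
    """Group raw (x, y) gaze samples into fixations using I-DT.

    max_dispersion and min_samples are illustrative defaults (hypothetical).
    Returns one (x, y) centroid per detected fixation, in order.
    """
    fixations = []
    i, n = 0, len(samples)
    while i + min_samples <= n:
        j = i + min_samples
        if _dispersion(samples[i:j]) <= max_dispersion:
            # Grow the window while the points stay tightly clustered.
            while j < n and _dispersion(samples[i:j + 1]) <= max_dispersion:
                j += 1
            window = samples[i:j]
            cx = sum(x for x, _ in window) / len(window)
            cy = sum(y for _, y in window) / len(window)
            fixations.append((cx, cy))
            i = j  # resume scanning right after this fixation
        else:
            i += 1  # this sample belongs to a rapid eye movement; skip it
    return fixations
```

The centroids it returns could then be fed to a geometric key-mapping step like the one described above. Note that a detector this simple would merge a double letter typed without any eye movement into a single fixation; the article does not describe how the researchers’ system handled duplicate letters.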

Notably, the method wasn’t confined to passwords. It extended to messages, email addresses, URLs, and PIN codes: essentially any text entered through the Vision Pro’s virtual keyboard. Although these results come from a lab setting, their accuracy points to a very real threat to user privacy, especially as attackers develop more sophisticated ways to exploit vulnerabilities of this kind.

Delayed Response from Apple

Apple was reportedly informed about GAZEploit back in April, yet the patch only arrived in July with the release of visionOS 1.3. While there are no reported cases of the vulnerability being exploited in real-world scenarios, the delay raises concerns about how quickly tech companies respond to critical security issues in high-profile devices.

For a company like Apple, which is renowned for its stringent privacy and security measures, this delay is surprising. The Vision Pro is a flagship product for Apple, marking its entrance into the mixed-reality space. Ensuring its users are protected from vulnerabilities like GAZEploit should have been an immediate priority.

Implications for the Future of Wearable Tech

GAZEploit exposes a significant issue for the future of wearable tech. As devices like the Vision Pro become more integrated into everyday life, with users typing, communicating, and interacting in virtual environments, new vectors for cyberattacks will inevitably emerge. Features that rely on biometric data, like eye tracking, pose a unique set of challenges because that data can leak indirectly, as it did here through the eye movements of an on-screen avatar.

Apple’s Vision Pro is likely just the beginning. As more companies develop mixed-reality headsets and AR/VR devices, the cybersecurity industry will need to evolve to address these complex new threats. Today it’s eye tracking; tomorrow it could be hand gestures or even brainwave inputs.

Looking Ahead

The GAZEploit vulnerability is a stark reminder that even the most advanced tech is susceptible to exploitation. While Apple has addressed the issue with a software patch, the discovery suggests there may be more weaknesses waiting to be uncovered, in the Vision Pro or in other AR/VR systems.

The onus is now on manufacturers to rigorously test and secure their devices, especially those that push the boundaries of human-machine interaction. For users, this means staying vigilant, updating devices regularly, and being cautious when inputting sensitive information in virtual environments.

Apple’s Vision Pro is a remarkable device, packed with potential. However, as GAZEploit demonstrates, the race to develop cutting-edge technology must always be matched with an equally intense focus on security and privacy.